Ctrl+AI+Reg - 29 January 2024

Your shortcut to AI regulation, law and policy updates around the world.

AI Regulation Updates

Updates to: Italy, New Zealand

Europe

🇮🇹 [26 January 2024] Italy fines first city for privacy breaches in use of AI: Italy's privacy watchdog has fined the northern city of Trento €50,000 for breaking data protection rules in the way it used AI in street surveillance projects. Trento is the first local administration in Italy to be sanctioned by the regulator over the use of data from AI tools.

Oceania

🇳🇿 [29 January 2024] New Zealand government looking to get up to speed on AI regulation: Science, Technology and Innovation Minister Judith Collins told the news outlet Newstalk ZB that the New Zealand Government will restart a parliamentary caucus on AI regulation, which will include revising a draft AI framework from the Department of Internal Affairs.


Opinions

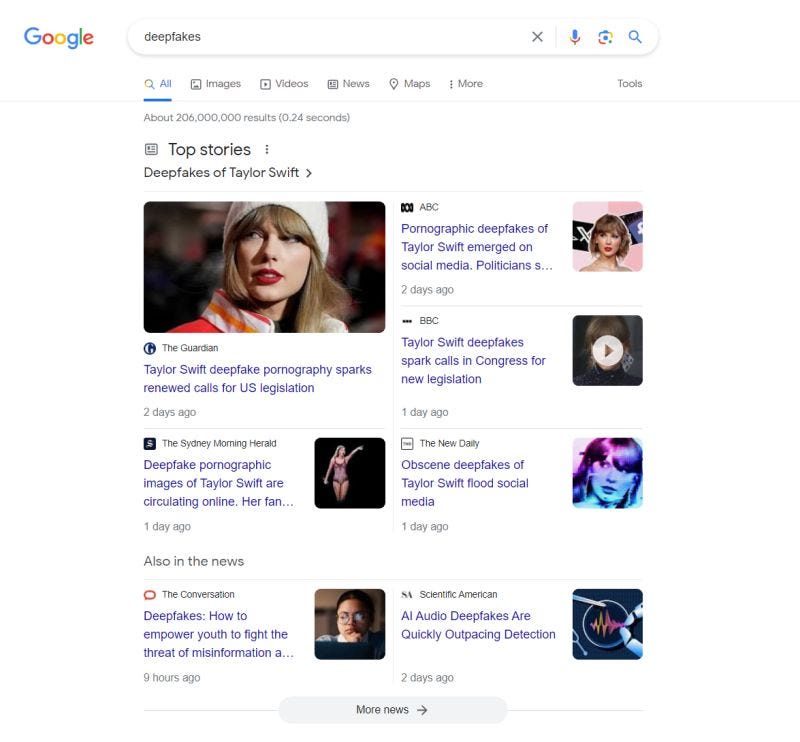

See my LinkedIn post with my thoughts on deepfake regulation following the Taylor Swift deepfake incident.

The explicit deepfakes of Taylor Swift dominated headlines last week and sparked more public discussion on "deepfake regulation".

But how would "deepfake regulation" work?

Let's first explore the 'lifecycle' of a deepfake, which generally involves 3 steps:

1️⃣ A deepfake generator is developed and launched to the public.

2️⃣ A bad actor uses the deepfake generator to create a nefarious deepfake.

3️⃣ The deepfake is posted and circulated online.

Step 3 is generally already covered by online safety, fraud, IP, defamation and criminal laws. While these laws might need reform, deepfake-specific regulation might not be needed here. I previously discussed how existing Australian laws apply to deepfakes (following the Tom Hanks deepfake incident): https://lnkd.in/g4MWSmUj

On the other hand, regulating steps 1 & 2 is tricky:

(a) it's hard for model providers to guarantee their models won't produce harmful deepfakes (as models tend to be black boxes);

(b) even if a model is designed not to create harmful deepfakes, a bad actor can still manually edit (e.g. in Photoshop) an innocuous deepfake into a harmful one; and

(c) there's a broader debate about whether AI should be specifically regulated at all.

Current regulatory examples show how tricky it is to regulate steps 1 & 2 (see my AI regulation tracker: https://lnkd.in/gcKRYUAx).

🇨🇳 China has an existing law on "Deep Synthesis Technology" (DST) which prohibits creating deepfakes in certain use cases and requires deepfakes to be watermarked as AI generated. China has another law on Generative AI which regulates the development, moderation and assessment of models. So China represents "step 1" regulation, though I have previously raised questions including:

❓ the ambiguity of the term "provider" (提供者), which doesn't distinguish between the different stakeholders along the AI supply chain.

❓ the DST law places watermarking requirements on the "provider" but not on the "user" (art 17). It's unclear how watermarking applies when a user edits a deepfake and passes it on to another user.

❓ the prohibited deepfake use cases focus on protecting public interests rather than individual interests such as personal reputation (art 6).

🇺🇸 The US has a draft bill called the "No AI FRAUD Act", which prohibits making a deepfake of someone without their consent. The US approach thus represents "step 2" regulation, relying on a consent regime to protect individuals. But the bill:

❓ doesn't specify what amounts to "consent", leaving it to the courts to interpret. For this bill to work, consent needs a standard suited to today's digital world: not too easily satisfied (e.g. by ticking a box on clickwrap terms), but also not too onerous for industry.

❓ provides a First Amendment (i.e. free speech) defence, but based on vague criteria.

Given how steps 1 & 2 are tricky, maybe let's prioritise getting the laws on step 3 right 👍

Want more?

Global AI Regulation Tracker (an interactive world map that tracks AI regulations around the world).

Global Tech Law News Hub (a news hub that curates the latest tech law news).